
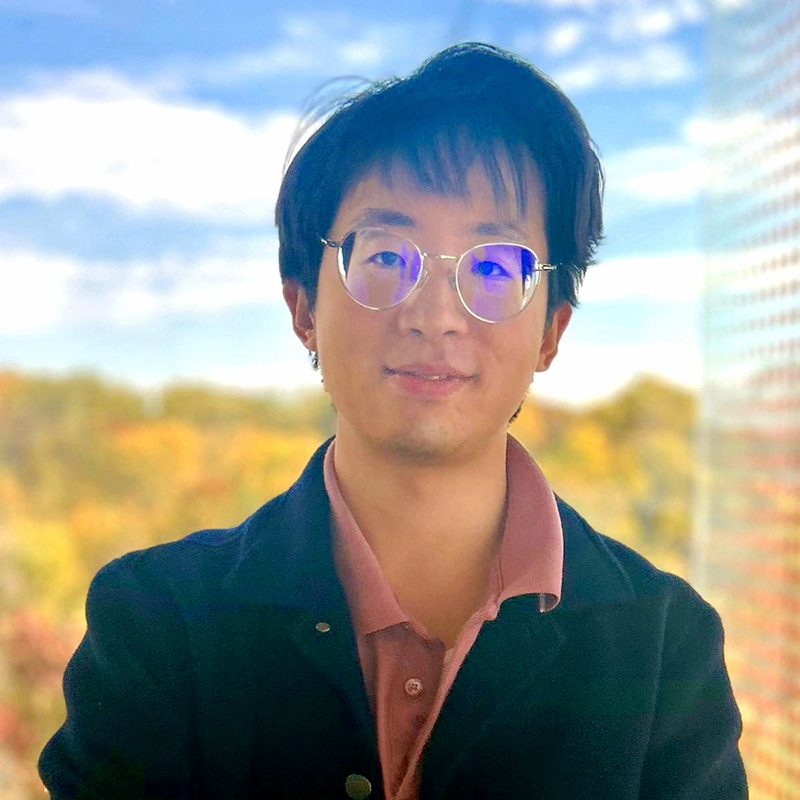

Uttaran Bhattacharya receives Adobe Research Fellowship

A University of Maryland doctoral student in computer science recently received funding from Adobe Research to continue his innovative work in developing systems and devices that can recognize, interpret, process and simulate human actions and emotions.

Uttaran Bhattacharya was one of only 10 graduate students in North America to receive an Adobe Research Fellowship for 2021. The fellowship, starting this August and lasting for one academic year, provides a $10,000 award and an opportunity for an internship at Adobe.

Bhattacharya’s research focuses on affective computing and modeling human motion. The goal, he says, is to create robust, emotion-aware artificial intelligence systems that can respond to the emotions of human users, blurring the lines in human-machine interfaces for social tasks such as conversations, interactions, teaching and building an experience in the digital world.

To this end, he is developing methods to automatically detect perceived emotions from videos and motion-captured data of non-verbal body expressions such as gaits and gestures, as well as methods to automatically generate virtual agents capable of expressing different emotions via their gaits and gestures. He won a Best Conference Paper Award at the 2021 IEEE Virtual Reality Conference for "Text2Gestures: A Transformer-Based Network for Generating Emotive Body Gestures for Virtual Agents."

Bhattacharya is advised at UMD by ISR-affiliated Distinguished University Professor Dinesh Manocha (CS/ECE/UMIACS).

In a nomination letter for the Adobe fellowship, Manocha noted Bhattacharya’s excellent research involving affective computing, virtual reality and autonomous driving, and his contributions to numerous academic papers on topics in computer vision, machine learning and physics-based simulations.

Bhattacharya is currently brainstorming projects to work on remotely with Adobe. He says the work will likely involve creating tools that automatically generate high-resolution content of human activities from input descriptions given as text, images or other media, drawing on the context and concepts in those descriptions and analyzing their affect and sentiment.

Bhattacharya notes that an added challenge will be to achieve this using data-efficient machine learning approaches such as weakly-supervised or self-supervised learning.

He recently completed an internship with Adobe Research that was separate from the fellowship award. Working remotely with a group based in San Jose, California, Bhattacharya helped design a system for detecting highlights of human activities from human-centric videos.

“I am honored and humbled to have had the opportunity to previously work with Adobe Research, and am grateful to receive the recent fellowship award,” Bhattacharya says. “It provides me a great opportunity to make meaningful contributions to Adobe’s amazing research ecosystem and lead the change in bringing our virtual and digital lives closer to reality.”

—We thank Melissa Brachfeld of UMIACS for this story

Published April 21, 2021