News Story

Helping robots navigate to a target, around obstacles and without a map

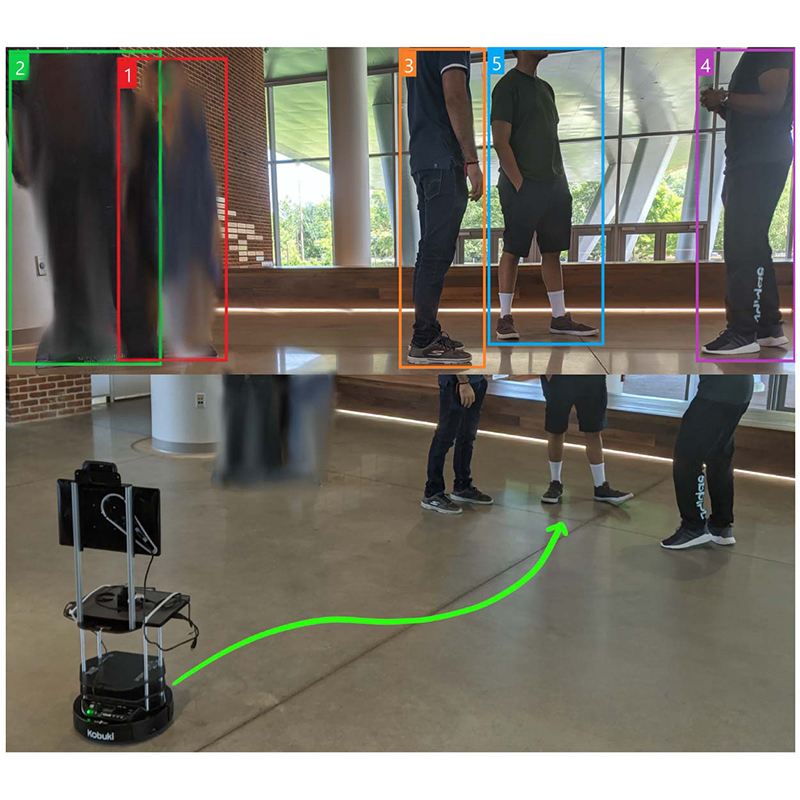

Robot image-goal navigation can be challenging. In the photo at left, the navigation target is far from the agent and out of its sight. In the photo at right, the agent must move around several obstacles to reach the navigation target. (Fig. 1 from the paper)

Target-driven navigation in unstructured environments has long been a challenging problem in robotics, especially in settings where robots must proceed with only a goal image and no map supplying accurate target position information. If the robot needs to navigate over a great distance or through crowded scenarios, it must also learn an effective exploration strategy and avoid collisions.

When robots are able to safely bypass obstacles and efficiently navigate to specified goals without a preset map, they will be far more useful in surveillance, inspection, delivery, and cleaning, among other applications. However, although the navigation problem has been well studied in robotics and related areas for several decades, solutions have been elusive.

"Image-Goal Navigation in Complex Environments via Modular Learning," published in the July 2022 issue of IEEE Robotics and Automation Letters, offers a promising strategy. The research was conducted by Qiaoyun Wu (School of Artificial Intelligence, Anhui University, China); Jun Wang and Xiaoxi Gong (College of Mechanical and Electrical Engineering, Nanjing University of Aeronautics and Astronautics, China); and ISR-affiliated Professor Dinesh Manocha (ECE/UMIACS) and his Ph.D. student Jing Liang (CS).

Unlike most existing image-goal navigation approaches, which use end-to-end learning to tackle this problem, the researchers' hierarchical decomposition framework uses four modules to decouple navigation goal planning, collision avoidance, and navigation-ending prediction, enabling more concentrated learning for each part. The first module maintains a navigation obstacle map. The second periodically predicts a long-term goal on the real-time map, which converts an image-goal navigation task into several point-goal navigation tasks. The third module plans collision-free command sets for navigating to these long-term goals. The final module stops the robot properly near the goal image.

The robot is driven forward towards the goal image by combining long-range planning with local motion control. This also helps the robot avoid various static and dynamic obstacles in the scenes.
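The four-module loop described above can be illustrated with a toy grid world. This is only a minimal sketch under loud assumptions, not the researchers' implementation: every name here (`ObstacleMap`, `predict_long_term_goal`, `plan_collision_free_path`, `should_stop`, `navigate`) is hypothetical, and the learned modules are replaced by simple breadth-first-search heuristics and a string comparison standing in for image matching.

```python
from collections import deque


def neighbors(cell):
    x, y = cell
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


class ObstacleMap:
    """Module 1 stand-in: records cells the robot has sensed as blocked."""

    def __init__(self, width, height):
        self.width, self.height = width, height
        self.blocked = set()

    def update(self, sensed):
        self.blocked |= set(sensed)

    def is_free(self, cell):
        x, y = cell
        return 0 <= x < self.width and 0 <= y < self.height and cell not in self.blocked


def predict_long_term_goal(grid, position, visited):
    """Module 2 stand-in (assumption): nearest reachable unvisited cell."""
    queue, seen = deque([position]), {position}
    while queue:
        cell = queue.popleft()
        if cell not in visited:
            return cell
        for nxt in neighbors(cell):
            if grid.is_free(nxt) and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return None  # nothing left to explore


def plan_collision_free_path(grid, start, goal):
    """Module 3 stand-in (assumption): BFS shortest path on the known map."""
    parents, queue = {start: None}, deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell != start:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        for nxt in neighbors(cell):
            if grid.is_free(nxt) and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return []


def should_stop(observation, goal_image):
    """Module 4 stand-in (assumption): exact match instead of a learned predictor."""
    return observation == goal_image


def navigate(true_obstacles, views, goal_image, start, size, horizon=3, max_steps=200):
    """Re-predict a point goal every `horizon` steps until the goal view is seen."""
    grid = ObstacleMap(*size)
    position, visited, steps = start, {start}, 0

    def sense(p):  # toy sensor: sees obstacles in the four adjacent cells
        return [n for n in neighbors(p) if n in true_obstacles]

    grid.update(sense(position))
    while steps < max_steps:
        if should_stop(views.get(position), goal_image):
            return position, steps
        goal = predict_long_term_goal(grid, position, visited)
        if goal is None:
            return None, steps
        for cell in plan_collision_free_path(grid, position, goal)[:horizon]:
            if not grid.is_free(cell):  # newly sensed obstacle: replan
                break
            position = cell
            visited.add(cell)
            steps += 1
            grid.update(sense(position))
            if should_stop(views.get(position), goal_image):
                return position, steps
    return None, steps


# Example: a 4x3 grid with a wall between the start and the goal view.
result = navigate({(1, 0), (1, 1)}, {(3, 0): "red door"}, "red door", (0, 0), (4, 3))
print(result)
```

Keeping the four pieces as separate functions mirrors the decoupling the article describes: the goal predictor or the stopping rule could be swapped for a learned model without touching the map or the local planner.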

The four modules are designed and maintained separately, which cuts down search time during navigation and improves generalization to previously unseen real scenes.

The method was evaluated both in a simulator and in the real world with a mobile robot. In simulation, the approach significantly cuts navigation search time relative to state-of-the-art methods. It also transfers easily from simulation to crowded real-world scenarios, where it attains at least a 17% increase in navigation success and a 23% decrease in navigation collisions compared with existing methods.

Published June 22, 2022