Improving speech intelligibility testing with new EEG methods

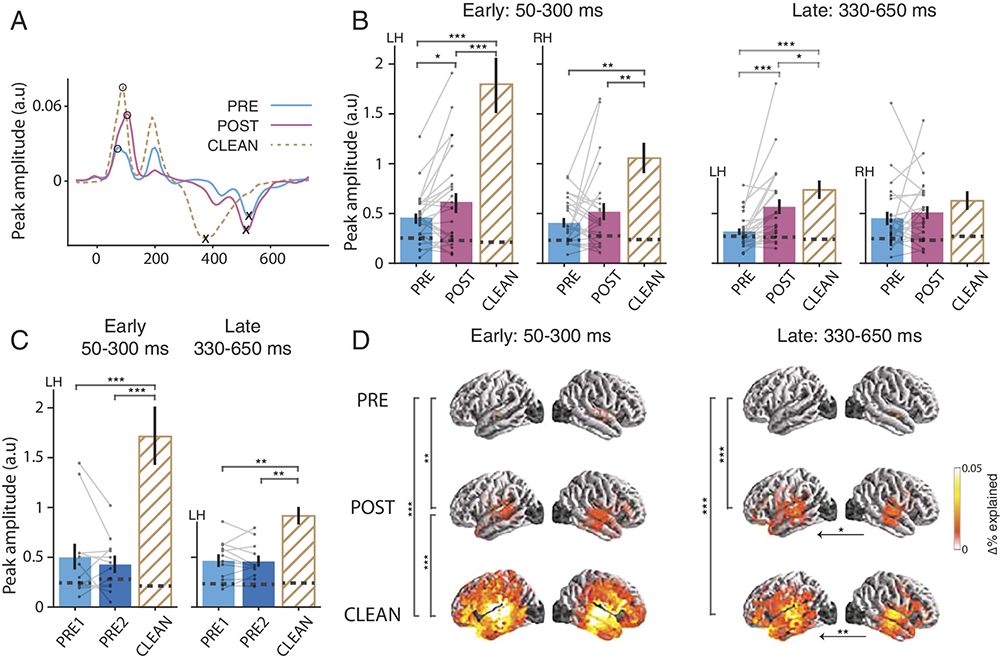

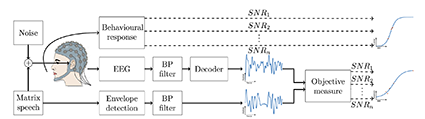

Figure 1 from the paper. Experimental setup: Flemish Matrix sentences were used to measure speech intelligibility behaviorally. In the EEG experiment, the researchers presented stimuli from the same Matrix corpus while recording the EEG. By correlating the speech envelopes of the Matrix sentences with the envelopes decoded from the EEG, the researchers obtained an objective measure of speech intelligibility.

Hearing someone talking and understanding what they are saying are two different things. If your friend is talking to you from another room, it can be very easy to hear that they are saying something, yet difficult to understand what they are saying. People who use hearing aids often say the devices allow them to hear, but not always to understand.

The tests that measure hearing and the tests that measure understanding are two different things as well. Hearing tests are well-established and reliable, while tests that measure understanding are… not so much.

In a new paper published in the Journal of the Association for Research in Otolaryngology, “Speech Intelligibility Predicted from Neural Entrainment of the Speech Envelope,” Professor Tom Francart, KU Leuven, Belgium; Professor Jonathan Simon, University of Maryland; and their colleagues show that borrowing some of the methods and technology already used in hearing tests can significantly improve the accuracy of intelligibility tests.

Hearing tests are typically performed in one of two ways. In the first, a person wears headphones and signals to the test operator whenever they hear a tone. In the second, a cap fitted with electroencephalogram (EEG) electrodes is placed on the person’s head, where it directly and automatically records brainwaves while the person listens to the sounds. Hearing tests given to babies shortly after birth often use this method: electrodes placed on the baby’s head measure whether brainwaves occur in response to sound.

The big advantage of EEG is that it is an objective measure: the person undergoing the test does not have to do anything for it to work. In fact, babies often sleep through the test.

Tests used to measure how well speech is understood are more problematic. Typically, the test requires a person to identify speech by repeating back to the tester what they think they have just heard. Because this test requires the person’s participation, many things can go wrong. For example, the person’s auditory prosthesis (e.g., a hearing aid or cochlear implant) may not fit correctly. The person may have impaired cognitive function, such as a memory problem. They may not fully understand the language being spoken. Or they may not be motivated or attentive to the task.

In their research, Francart, Simon and their colleagues showed that EEG-based testing could also be used to measure speech understanding. The new objective method they developed is not only accurate but also independent of the person’s participation.

“This means the test will work regardless of the listener’s state of mind,” Simon explains. “We don’t want a test that would fail just because they stopped paying attention.”

Instead of presenting the person with tonal sounds while they are wearing the electrodes (as in the hearing test), the researchers had them listen to a sample of speech.

“With 64 electrodes, we measure the brain waves when someone listens to a sentence,” says Francart. “We filter out the brainwaves that aren’t linked to the speech sound as much as possible. We compare the remaining signal with the original sentence.”
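The article does not spell out how the brainwaves unrelated to speech are filtered out. A common approach in this line of research is a linear "backward" decoder, which weights each electrode at several time lags and sums the results to reconstruct the speech envelope from the EEG. The Python sketch below assumes such a decoder with weights that have already been trained (training, for example by regularized least squares, is not shown); the function name, toy signals and weight values are hypothetical and are not taken from the study.

```python
from typing import List, Sequence


def reconstruct_envelope(eeg: Sequence[Sequence[float]],
                         weights: Sequence[Sequence[float]]) -> List[float]:
    """Apply a hypothetical time-lagged linear decoder to multichannel EEG.

    eeg[c][t]     : EEG sample of channel c at time index t
    weights[c][k] : decoder weight for channel c at lag k; lag k uses the
                    sample k steps after t, because the brain's response
                    follows the sound that caused it
    Returns one reconstructed envelope value for every t at which all
    lags are available.
    """
    n_channels = len(eeg)          # e.g. 64 electrodes in the study
    n_samples = len(eeg[0])
    n_lags = len(weights[0])
    recon = []
    for t in range(n_samples - n_lags + 1):
        value = 0.0
        # Weighted sum over every electrode and every lag.
        for c in range(n_channels):
            for k in range(n_lags):
                value += weights[c][k] * eeg[c][t + k]
        recon.append(value)
    return recon
```

With two toy channels and two lags, `reconstruct_envelope([[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]], [[0.5, 0.5], [1.0, 0.0]])` returns `[1.5, 3.5, 3.5]`. A real recording would use far more samples and all 64 channels.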

The way the brain processes speech can be inferred from the correlation between these two signals. If they are sufficiently similar, the person has not only heard something but also properly understood the message, Francart says.
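The figure caption describes this comparison as correlating the original speech envelope with the envelope decoded from the EEG. Assuming the standard Pearson correlation coefficient (the article does not name the exact measure), the comparison can be sketched in Python as follows; the function name and example signals are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length signals, e.g. the
    original speech envelope (x) and the EEG-decoded envelope (y)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("signals must have the same length (at least 2 samples)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance and variances, all without the 1/n factor, which cancels.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant signal")
    return cov / math.sqrt(var_x * var_y)
```

For example, `pearson_r([0.0, 1.0, 2.0, 3.0], [0.0, 2.0, 4.0, 6.0])` gives 1.0, because the second signal is a scaled copy of the first. Real decoded envelopes track the speech far less closely. The article does not say where "sufficient similarity" begins, so the sketch deliberately hard-codes no threshold.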

The new test promises to be much better at determining speech intelligibility than the current “behaviorally” measured test. It is both automatic and objective, and it can provide more valuable information. This could lead to better diagnoses in patients with speech comprehension issues.

It will be especially useful for people with cognitive difficulties or those who cannot provide feedback. In addition, the test could help people who have just received a hearing aid and may need it adjusted. One day it could even be used to fit hearing aids automatically.

“At the moment, hearing aids ensure that sounds are audible, but not necessarily intelligible,” Francart says. “With built-in electrodes, the device could measure how well the speech is understood. Then it could determine whether adjustments are needed, for example, for something like background noise.”

The test also could be used in developing new “smart” hearing devices that are able to continuously adapt to individual users in specific and changing environments.

According to Simon, “Adjustments could be made automatically, based on how successfully the brain is able to turn the processed speech sound into an understandable speech sound.”

- - - - - - - - - -

Citation. “Speech Intelligibility Predicted from Neural Entrainment of the Speech Envelope,” by Jonas Vanthornhout, Lien Decruy, Jan Wouters, Jonathan Z. Simon and Tom Francart. Journal of the Association for Research in Otolaryngology, published online Feb. 20, 2018. DOI: 10.1007/s10162-018-0654-z.

Funding. This research was funded by the European Research Council (GA 637424), the Research Foundation—Flanders (FWO) and KU Leuven; and by the National Institutes of Health (R01-DC-014085).

Tom Francart is a professor in the Department of Neurosciences, Experimental Oto-Rhino-Laryngology Research Group at KU Leuven, Belgium.

Jonathan Simon is a professor with a joint appointment in the Department of Biology, the Department of Electrical and Computer Engineering, and the Institute for Systems Research at the University of Maryland. He is also a member of the university's Brain and Behavior Initiative.

Published March 7, 2018