UMD to Lead New $20M NSF Institute for Trustworthy AI in Law and Society

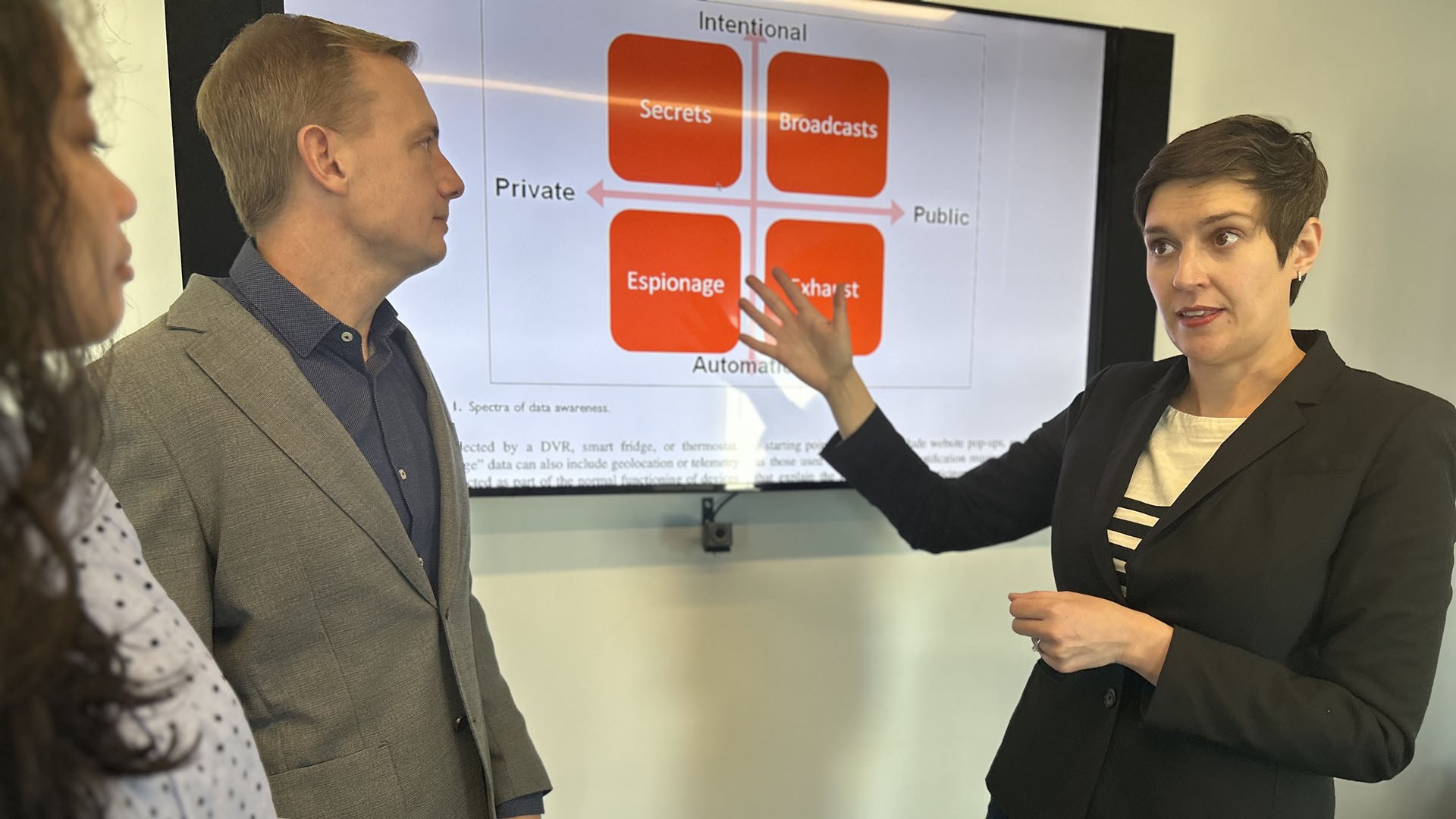

UMD faculty researchers are leading a new $20 million NSF Institute for Trustworthy AI in Law and Society. (Below, from left) University of Maryland doctoral student Lovely-Frances Domingo, Professor Hal Daumé III and Associate Professor Katie Shilton discuss Shilton’s work on ethics and policy for the design of information technologies.

Illustration by Alamy

By Tom Ventsias

The University of Maryland will lead a multi-institutional effort supported by the National Science Foundation (NSF) that will develop new artificial intelligence (AI) technologies designed to promote trust and mitigate risks, while simultaneously empowering and educating a public increasingly fascinated by the recent rise of eerily human-seeming applications like ChatGPT.

Announced today, the NSF Institute for Trustworthy AI in Law & Society (TRAILS) unites specialists in AI and machine learning with social scientists, legal scholars, educators and public policy experts. The team will work with communities affected by the technology, private industry and the federal government to determine what trust in AI would look like, how to develop technical solutions for AI that actually can be trusted, and which policy models best create and sustain trust.

Funded by a $20 million award from NSF, the new institute is expected to shift the practice of AI development from one driven primarily by technological innovation to one driven by ethics, human rights, and input and feedback from communities whose voices have previously been marginalized.

“As artificial intelligence continues to grow exponentially, we must embrace its potential for helping to solve the grand challenges of our time, as well as ensure that it is used both ethically and responsibly,” said UMD President Darryll J. Pines. “With strong federal support, this new institute will lead in defining the science and innovation needed to harness the power of AI for the benefit of the public good and all humankind.”

In addition to UMD, TRAILS will include faculty members from George Washington University (GW) and Morgan State University, with additional support from Cornell University, the National Institute of Standards and Technology (NIST) and private sector organizations such as the Dataedx Group, Arthur AI, Checkstep, FinRegLab and Techstars.

Photo by Maria Herd

At the heart of the new institute is a consensus that AI is currently at a crossroads. AI-infused systems have great potential to enhance human capacity, increase productivity, catalyze innovation and help address complex problems, but today’s systems are developed and deployed through a process that is opaque and insular to the public. As a result, they are often untrustworthy to those affected by the technology.

“We’ve structured our research goals to educate, learn from, recruit, retain and support communities whose voices are often not recognized in mainstream AI development,” said Hal Daumé III, a UMD professor of computer science who is lead principal investigator of the NSF award and will serve as the director of TRAILS.

Inappropriate trust in AI can result in many negative outcomes, Daumé said. People often “overtrust” AI systems to do things they’re fundamentally incapable of. This can lead to people or organizations giving up their own power to systems that are not acting in their best interest. At the same time, people can also “undertrust” AI systems, leading them to avoid using systems that could ultimately help them.

Given these conditions, and because AI is increasingly deployed in online communications, in determining health care options and in offering guidance within the criminal justice system, it has become urgent to ensure that people’s trust in AI systems matches those systems’ actual level of trustworthiness.

TRAILS has identified four key research thrusts to promote the development of AI systems that can earn the public’s trust through broader participation in the AI ecosystem.

The first, known as participatory AI, advocates involving human stakeholders in the development, deployment and use of these systems so that they align with the values and interests of diverse groups of people, rather than being controlled by a few experts or businesspeople. The effort will be led by Katie Shilton, an associate professor in UMD’s College of Information Studies who specializes in ethics and sociotechnical systems.

The second research thrust aims to develop advanced machine learning algorithms that reflect the values and interests of the relevant stakeholders and is led by Tom Goldstein, a UMD associate professor of computer science.

Daumé, Shilton and Goldstein all have appointments in the University of Maryland Institute for Advanced Computer Studies, which is providing administrative and technical support for TRAILS.

David Broniatowski, an associate professor of engineering management and systems engineering at GW, will lead the institute’s third research thrust: evaluating how people make sense of the AI systems that are developed. Susan Ariel Aaronson, a research professor of international affairs at GW, will use her expertise in data-driven change and international data governance to lead the institute’s fourth thrust: participatory governance and trust.

Virginia Byrne, an assistant professor of higher education and student affairs at Morgan State, will lead community-driven projects related to the interplay between AI and education. The TRAILS team will rely heavily on Morgan State’s leadership—as Maryland’s preeminent public urban research university—in conducting rigorous, participatory community-based research with broad societal impacts, Daumé said.

Federal officials at NIST will collaborate with TRAILS in the development of meaningful measures, benchmarks, test beds and certification methods—particularly as they apply to topics essential to trust and trustworthiness such as safety, fairness, privacy and transparency.

“The ability to measure AI system trustworthiness and its impacts on individuals, communities and society is limited,” said Under Secretary of Commerce for Standards and Technology and NIST Director Laurie E. Locascio, former University of Maryland vice president for research. “TRAILS can help advance our understanding of the foundations of trustworthy AI, ethical and societal considerations of AI, and how to build systems that are trusted by the people who use and are affected by them.”

Maryland’s U.S. Sen. Chris Van Hollen said the state is at the forefront of U.S. scientific innovation, thanks to a talented workforce, top-tier universities and federal partnerships.

“This National Science Foundation award for the University of Maryland—in coordination with other Maryland-based research institutions including Morgan State University and NIST—will promote ethical and responsible AI development, with the goal of helping us harness the benefits of this powerful emerging technology while limiting the potential risks it poses,” Van Hollen said. “This investment entrusts Maryland with a critical priority for our shared future, recognizing the unparalleled ingenuity and world-class reputation of our institutions.”

Thank you to Tom Ventsias for the article. Read the original version of this story on the Maryland Today website.

Published May 12, 2023